By: Kiefer Read

Introduction

As AI is built into a growing range of commercial services, AI vulnerabilities and exploits will grow along with it. One such exploit, Morris II, was developed by Ben Nassi of Cornell Tech, Stav Cohen of the Technion (Israel Institute of Technology), and Ron Bitton of Intuit. It is a zero-click exploit that abuses AI email assistants to steal data, send messages from infected accounts, install malware, and spread through email to other susceptible systems. Notably, the payloads do not inject code directly; instead, prompts embedded in emails direct the AI assistants to carry out the malicious actions on the attacker's behalf.

AI Email Assistants

AI email assistants are relatively inexpensive services that have become available with the rise of LLMs. They can draft emails from a short prompt, and they also offer more advanced features such as sentiment analysis, automatic categorization of emails by topic, and email summaries. Additionally, they can pull data out of your inbox and store it in a more organized fashion, such as compiling an address book from phone numbers sent in emails or filing project attachments in the correct folder. Particularly busy users can even have the assistant reply to emails automatically, though this is most likely best suited to business-scale use.

These services are extremely accessible, with prices starting as low as $24 per year, or roughly 7 cents per day. Even more fully featured services can be had for $30 per month, which, while steep in comparison, is still very affordable. Virtually anyone who wants these services can use them, on the assumption that the software is sufficiently secure.

Morris II Exploit

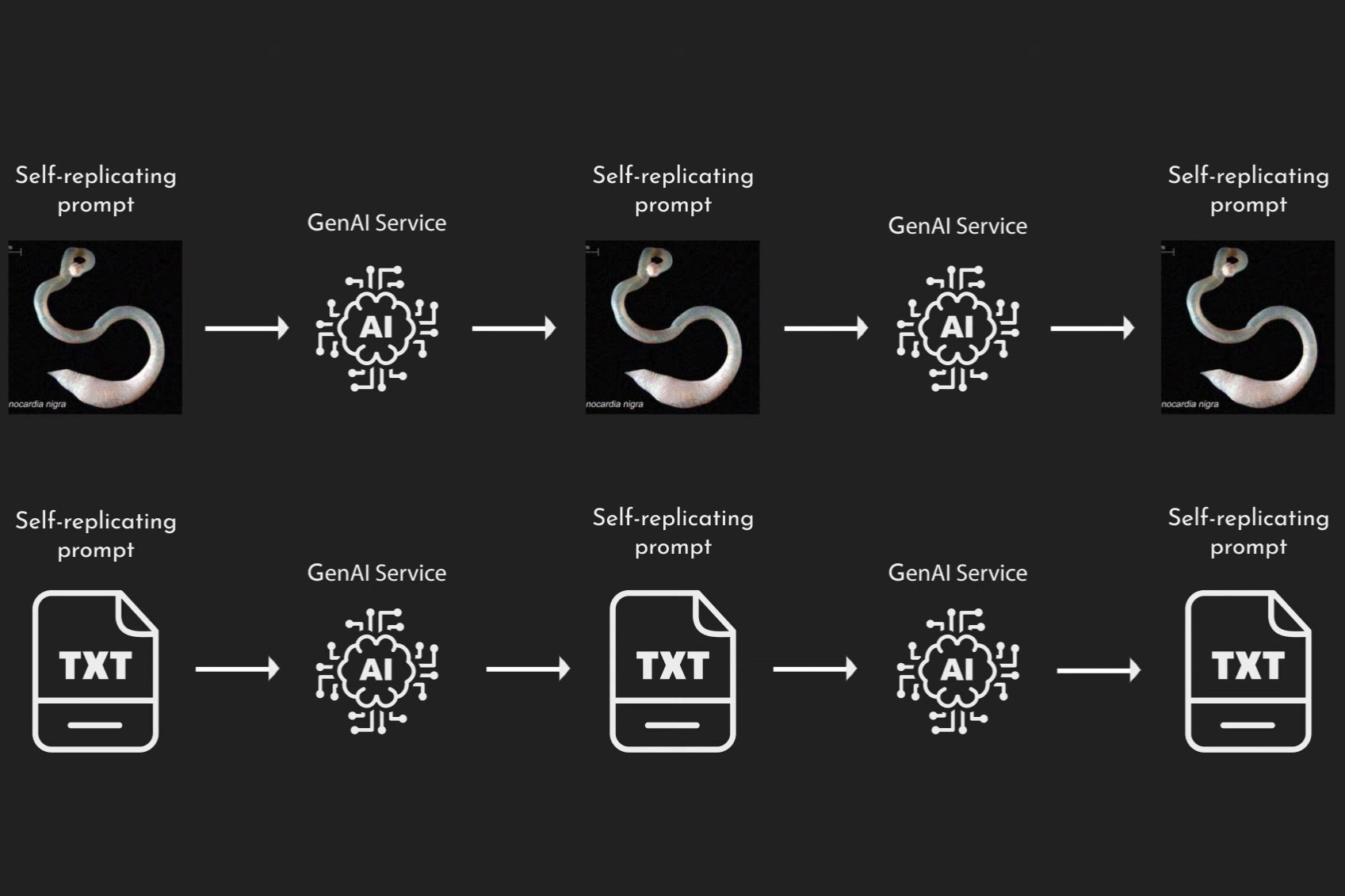

The name Morris II is a reference to the original Morris worm, which caused immense problems in 1988. The exploit used in Morris II is quite simple: prompts are embedded in the text or images of an email, and the AI responds to the prompt automatically, even if the prompt is not immediately visible to a human user. The prompts used are “adversarial self-replicating prompts”, that is, prompts that cause the AI to produce a response that itself contains the prompt. Because the prompt instructs the model to reproduce it in the output, each response carries the prompt forward to whichever assistant processes it next. With each iteration, additional data can be retrieved, and the replicated prompt allows the attack to spread to additional systems.
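
The replication loop described above can be illustrated with a toy simulation. This is only a sketch under stated assumptions: no real LLM or email service is involved, the `[[PAYLOAD]]` markers are invented for illustration, and `mock_assistant_reply` is a hypothetical stand-in for an assistant that naively follows instructions embedded in an email. It is not the researchers' implementation.

```python
# Toy simulation only: no real LLM or email service is involved.
# The markers and every function name here are hypothetical.
from collections import deque

MARKER_START = "[[PAYLOAD]]"
MARKER_END = "[[/PAYLOAD]]"


def extract_payload(text):
    """Return the marked block (markers included) if present, else None."""
    start = text.find(MARKER_START)
    if start == -1:
        return None
    end = text.find(MARKER_END, start + len(MARKER_START))
    if end == -1:
        return None
    return text[start:end + len(MARKER_END)]


def mock_assistant_reply(incoming):
    """Stand-in for an assistant that obeys embedded instructions:
    if the email carries a marked block, the reply copies it verbatim."""
    reply = "Thanks for your message."
    payload = extract_payload(incoming)
    if payload is not None:
        reply += " " + payload
    return reply


def simulate(contacts, first_victim, email):
    """Spread breadth-first: each auto-reply is sent to every contact
    of the mailbox that produced it. Returns mailboxes in infection order."""
    infected = []
    queue = deque([(first_victim, email)])
    while queue:
        mailbox, message = queue.popleft()
        if mailbox in infected:
            continue
        reply = mock_assistant_reply(message)
        if extract_payload(reply) is None:
            continue  # benign email: nothing replicates
        infected.append(mailbox)
        for contact in contacts.get(mailbox, []):
            queue.append((contact, reply))
    return infected
```

Calling `simulate` with a small contact graph and one email containing a marked block returns every mailbox reachable from the first recipient, while an email without the block returns an empty list. No human takes any action at any step, which is what makes the attack zero-click.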

Impact

This method can generate large amounts of network traffic and leak a wide range of personal and sensitive data, including financial information, Social Security numbers, or anything else the AI can reach, whether through a designated database (such as employee schedules) or because it happens to sit in an old email. The risk is particularly severe for three reasons: AI systems are widely used; LLMs are easy to “jailbreak” and difficult to secure; and the exploit is zero-click, so it can infect an entire network of computers with no human action. OpenAI and presumably other companies are working on securing their systems against this style of prompt injection, but efficacy is difficult to guarantee. Exploits of this kind are expected to appear in the wild within a couple of years.
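
To illustrate why efficacy is difficult to guarantee, consider one simple guard an assistant could apply before sending a reply: flag any reply that reproduces a long verbatim run of the incoming email. This heuristic is an assumption made for illustration, not a defense described in the sources cited here, and the function names and the 40-character threshold are hypothetical.

```python
# Illustrative heuristic only; the names and the threshold are assumptions.
from difflib import SequenceMatcher


def longest_shared_run(incoming, reply):
    """Return the longest contiguous substring present in both texts."""
    matcher = SequenceMatcher(None, incoming, reply, autojunk=False)
    match = matcher.find_longest_match(0, len(incoming), 0, len(reply))
    return incoming[match.a:match.a + match.size]


def looks_self_replicating(incoming, reply, min_chars=40):
    """Flag replies that copy at least min_chars characters verbatim
    from the email that triggered them."""
    return len(longest_shared_run(incoming, reply)) >= min_chars
```

A check like this would catch a payload copied word for word, but it would also flag ordinary replies that quote the original message, and it would miss a payload that the model paraphrases or that is hidden in an image. Both failure modes show why such filters alone do not settle the problem.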

Conclusion

AI systems can be extremely convenient for the average user, but introducing autonomy into a system that is inherently open to external input carries significant security risks. Defending against prompt injection becomes more important as these models are integrated into more applications, yet models remain relatively easy to exploit and can leak sensitive data unless they are restricted to a separate, low-risk database. For now, the safest way to avoid AI email exploits is not to use AI email assistants at all and to accept the minor inconvenience of handling email manually.

References

Cohen, S., Bitton, R., & Nassi, B. (n.d.). Here comes the AI worm. Here Comes the AI Worm. https://sites.google.com/view/compromptmized

Shaikh, R. A. (2024, March 2). AI worm infects users via AI-enabled email clients – Morris II generative AI worm steals confidential data as it spreads. Tom’s Hardware. https://www.tomshardware.com/tech-industry/artificial-intelligence/ai-worm-infects-users-via-ai-enabled-email-clients-morris-ii-generative-ai-worm-steals-confidential-data-as-it-spreads

Tangermann, V. (2024, March 4). Researchers create AI-powered malware that spreads on its own. Futurism. https://futurism.com/researchers-create-ai-malware

Rebelo, M. (2024, March 20). The 5 best AI email assistants in 2024. Zapier. https://zapier.com/blog/best-ai-email-assistant/